Kafka Unveiled

- Author: Ram Simran G (Twitter: @rgarimella0124)

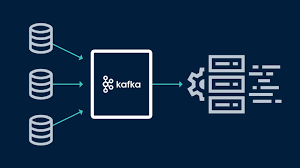

In the bustling corridors of LinkedIn around 2010, a group of engineers faced a challenge that would soon be common across the tech industry: how to efficiently handle massive streams of real-time data. Their solution, Apache Kafka, was open-sourced in 2011 and would transcend its origins to become one of the most critical components in modern data architecture.

Apache Kafka isn’t just another message broker—it’s a distributed event streaming platform that has fundamentally changed how organizations handle data at scale. Today, we’ll dive deep into what makes Kafka unique, how it works, and why it has become indispensable for companies ranging from Netflix to Uber.

What Exactly Is Apache Kafka?

At its core, Kafka is a distributed event store and streaming platform designed to handle high-throughput, fault-tolerant workloads. Unlike traditional message queues that simply pass messages between systems, Kafka creates a persistent, replicated log of events that can be consumed multiple times by different applications.

Think of Kafka as a super-powered digital journal. Every event that occurs—whether it’s a user click, a sensor reading, or a transaction—gets recorded in order. What makes this journal special is that multiple readers can access it simultaneously, each keeping track of their own position without affecting others.

The Building Blocks of Kafka

Messages: The Atomic Units

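The foundation of Kafka is the message (also called a record). Each message consists of three key components, plus a timestamp:

- Headers: Optional key-value metadata (for example, a trace ID or content type) that applications can read; Kafka itself does not route messages based on headers

- Key: Optional; when present, the default partitioner hashes it to pick the partition, so messages with the same key land in the same partition and stay in order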

- Value: The actual payload—the data you’re sending

Kafka treats keys and values as opaque byte arrays, and producers send messages in batches (optionally compressed), which keeps per-message overhead low as data moves across the cluster.
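To make the structure concrete, here is a minimal sketch of a record as an immutable Python dataclass. The `Record` class and its field layout are illustrative assumptions, not a class from any Kafka client library; real clients also attach a timestamp and handle serialization for you.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Record:
    """Toy model of a Kafka record; not a real client class."""
    key: Optional[bytes]   # may be None; used to choose a partition
    value: bytes           # the payload
    headers: tuple = ()    # optional (name, bytes) metadata pairs


click = Record(
    key=b"user-42",
    value=b'{"event": "click", "page": "/home"}',
    headers=(("trace-id", b"abc123"),),
)
```

Keeping the key and value as bytes mirrors how the broker sees them: it stores and forwards the bytes without interpreting them.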

Topics: Categorizing the Data Flow

In Kafka’s world, messages are organized into topics. You can think of topics as dedicated channels for specific types of data—separate streams for user activity, application logs, transaction events, and so on.

Each topic can be configured independently, for example with its own retention period, replication factor, and partition count. Some might prioritize durability, others speed, but every topic persists its messages and preserves their order within each partition (not across the topic as a whole).

Partitions: The Secret to Kafka’s Scalability

Topics are divided into partitions, which are perhaps the most ingenious aspect of Kafka’s design. Each partition is an ordered, immutable sequence of messages that is continually appended to. Messages within a partition are assigned a sequential ID number called an offset.
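The append-only behaviour can be sketched with a small in-memory class. `PartitionLog` is a hypothetical stand-in for one partition, assuming a plain Python list as storage; a real broker writes to segment files on disk.

```python
class PartitionLog:
    """Toy in-memory stand-in for one partition: an append-only list."""

    def __init__(self):
        self._records = []

    def append(self, value):
        """Append a value and return the offset it was assigned."""
        offset = len(self._records)
        self._records.append(value)
        return offset

    def read(self, offset, max_records=10):
        """Return up to max_records (offset, value) pairs starting at offset."""
        chunk = self._records[offset:offset + max_records]
        return list(enumerate(chunk, start=offset))


log = PartitionLog()
first = log.append("order-created")   # assigned offset 0
second = log.append("order-paid")     # assigned offset 1
```

Because records are only ever appended, an offset always refers to the same record, which is what lets many readers share one log.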

Partitioning serves two critical purposes:

- It allows Kafka to scale horizontally across multiple servers

- It enables parallel processing by multiple consumers

When you see a topic with multiple partitions, think of it as several parallel queues working together to handle what would otherwise be an overwhelming stream of data.
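A rough sketch of how keyed messages spread across those parallel queues is shown below. The `choose_partition` helper is hypothetical, and `zlib.crc32` is used only as a convenient stable hash; Kafka's Java client uses a murmur2 hash in its default partitioner, so real placements would differ.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition index with a stable hash.

    Assumption: crc32 is a stand-in for the hash a real client uses.
    """
    return zlib.crc32(key) % num_partitions


partitions = [[] for _ in range(3)]
events = [(b"alice", "login"), (b"bob", "login"), (b"alice", "logout")]
for user, event in events:
    partitions[choose_partition(user, 3)].append((user, event))
```

Every event for `alice` lands in the same list in the order it was sent, which is the per-key ordering that partitioning preserves.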

The Kafka Ecosystem: Players and Their Roles

Brokers: The Foundation of the Cluster

A Kafka broker is simply a server that’s part of the Kafka cluster. Each broker hosts some subset of partitions and handles requests from producers and consumers. Brokers cooperate: if one fails, other brokers that hold replicas of its partitions take over its responsibilities.

A cluster typically consists of multiple brokers for redundancy and load distribution. Provided topics are created with a replication factor greater than one, this arrangement means the loss of a single broker does not take the data offline.

Producers: The Data Creators

Producers are applications that create new messages and publish them to Kafka topics. The producer chooses the topic, and its partitioner (by default driven by the message key) chooses the partition.

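Producers can work in various modes:

- Asynchronous: Send without blocking; the acknowledgment is handled later in a callback, or ignored entirely (fire and forget)

- Synchronous: Wait for the broker’s acknowledgment before continuing

- Idempotent: Let the broker discard duplicates caused by retries; full exactly-once processing additionally requires transactions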

The flexibility of producer configurations allows developers to make appropriate trade-offs between performance and reliability based on their specific use cases.
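The idea behind idempotent sends can be sketched as follows. Kafka's idempotent producer tags each batch with a producer ID and a per-partition sequence number so the broker can recognise retries; the `DedupingPartition` class below is a simplified, hypothetical model of that check, not broker code.

```python
class DedupingPartition:
    """Toy partition that drops retried sends it has already stored."""

    def __init__(self):
        self.records = []
        self._last_seq = {}  # producer_id -> highest sequence number stored

    def append(self, producer_id, seq, value):
        """Store the value unless this producer already sent this sequence."""
        if seq <= self._last_seq.get(producer_id, -1):
            return False  # duplicate from a retry; ignore it
        self._last_seq[producer_id] = seq
        self.records.append(value)
        return True


partition = DedupingPartition()
partition.append("producer-1", 0, "payment:100")
partition.append("producer-1", 0, "payment:100")  # retry after a lost ack
partition.append("producer-1", 1, "payment:250")
```

After the three calls the partition holds two records: the retried send is accepted by the network but never written twice.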

Consumers: The Data Processors

Consumers read messages from topics, processing the stream of events according to their application logic. Each consumer tracks its own position (offset) in every partition it reads and commits that position back to Kafka, so after a restart it resumes from the last committed offset.

Consumers often work in consumer groups, where multiple instances collaborate to process different partitions of the same topic. This design enables horizontal scaling of processing and provides fault tolerance—if one consumer fails, others in the group can take over its workload.
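The way a group shares partitions, and what happens when a member drops out, can be sketched with a simple round-robin split. The `assign_partitions` helper is hypothetical; Kafka's built-in assignors (range, round-robin, sticky, and others) are more involved.

```python
def assign_partitions(partitions, consumers):
    """Spread partitions over consumers round-robin.

    Hypothetical helper; assumes at least one consumer is present.
    """
    assignment = {consumer: [] for consumer in consumers}
    ordered = sorted(consumers)
    for i, partition in enumerate(sorted(partitions)):
        assignment[ordered[i % len(ordered)]].append(partition)
    return assignment


before = assign_partitions(range(4), ["c1", "c2"])  # two healthy members
after = assign_partitions(range(4), ["c1"])         # c2 has failed
```

Here `before` gives each consumer two partitions, and `after` hands all four to the survivor. Note that a partition is read by only one member of a group at a time, so a group with more consumers than partitions leaves the extra consumers idle.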

How Kafka Works End-to-End

The end-to-end flow of data through Kafka follows these steps:

Cluster Setup: A Kafka cluster is established with multiple broker servers.

Topic Creation: Topics are created and configured with the appropriate number of partitions based on throughput needs and replication factor for fault tolerance.

Message Production: Producer applications send messages to specific topics. Each message is assigned to a partition (by hashing its key if it has one, otherwise by the producer spreading keyless messages across partitions) and appended to the end of that partition.

Message Storage: Kafka stores messages on disk, keeping them for a configured retention period (which can be based on time or size). This persistence is central to Kafka’s durability.

Message Consumption: Consumer applications read messages from partitions, processing them according to their business logic. Each consumer keeps track of which messages it has already processed by maintaining its current offset.

Fault Handling: If a broker fails, leadership for its partitions transfers to in-sync replicas on other brokers. If a consumer fails, its partitions are redistributed among the other consumers in its group.
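The consumption and recovery steps above can be sketched in a few lines. `ToyConsumer` is a hypothetical class, a plain list stands in for a stored partition, and saving the offset in a variable stands in for committing it to Kafka.

```python
class ToyConsumer:
    """Reads one partition and remembers how far it got."""

    def __init__(self, log, committed_offset=0):
        self.log = log                  # a plain list standing in for a partition
        self.offset = committed_offset  # next offset to read

    def poll(self, max_records=2):
        """Return the next batch and advance the position."""
        batch = self.log[self.offset:self.offset + max_records]
        self.offset += len(batch)
        return batch


partition = ["e0", "e1", "e2", "e3", "e4"]  # already produced and stored
consumer = ToyConsumer(partition)
first_batch = consumer.poll()                # reads e0 and e1
saved = consumer.offset                      # "commit" the position

# Simulate a crash and restart: a new instance resumes from the commit.
restarted = ToyConsumer(partition, committed_offset=saved)
second_batch = restarted.poll()              # reads e2 and e3
```

Reading never removes anything from the partition, so the restarted consumer, or an entirely different application, can read the same records again from any offset that is still retained.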

What Makes Kafka Stand Out?

Kafka offers several advantages that have contributed to its widespread adoption:

High Throughput and Low Latency

A suitably sized Kafka cluster can handle millions of messages per second at low latency, making it suitable for real-time data processing at scale. This performance comes from design choices such as sequential disk I/O, batching, and zero-copy data transfer.

Durability and Fault Tolerance

By replicating data across multiple brokers, Kafka ensures that your messages survive even if individual servers fail. This replication, combined with leader-follower partition management, creates a robust system that can maintain availability through various failure scenarios.
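A simplified picture of leader failover is sketched below. The `elect_leader` helper, the broker IDs, and the "first eligible replica in the list" rule are illustrative assumptions, not Kafka's controller logic.

```python
def elect_leader(replicas, in_sync, failed):
    """Pick the first in-sync replica that has not failed.

    Hypothetical helper; returns None when no replica is eligible.
    """
    for broker in replicas:
        if broker in in_sync and broker not in failed:
            return broker
    return None  # no eligible replica: the partition is unavailable


replicas = [1, 2, 3]   # broker 1 is the current leader
in_sync = {1, 2, 3}    # followers that are fully caught up
new_leader = elect_leader(replicas, in_sync, failed={1})
```

Restricting the choice to in-sync replicas is the important part: a follower that has fallen behind could become leader only at the cost of losing acknowledged messages.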

Horizontal Scalability

Need to handle more data? Add more brokers to your Kafka cluster and reassign partitions onto them (existing partitions do not move to new brokers on their own). The ability to scale horizontally means Kafka can grow with your needs without requiring a complete redesign of your architecture.

Message Retention

Unlike many message brokers that delete messages as soon as they’re consumed, Kafka retains messages for a configurable period. This retention enables multiple applications to process the same data independently and supports reprocessing scenarios.
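Time-based retention can be sketched as a filter over timestamps. The `apply_time_retention` helper and the integer timestamps are assumptions for illustration; Kafka actually deletes whole log segments rather than individual records.

```python
def apply_time_retention(records, now, retention_seconds):
    """Keep only (timestamp, value) records inside the retention window.

    Simplification: works record by record instead of segment by segment.
    """
    cutoff = now - retention_seconds
    return [(ts, value) for ts, value in records if ts >= cutoff]


records = [(100, "old"), (500, "recent"), (900, "newest")]
kept = apply_time_retention(records, now=1000, retention_seconds=600)
```

The decision depends only on age, never on whether a record has been read, which is why a slow or newly added consumer can still see everything inside the window.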

Exactly-Once Semantics

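For critical workflows, Kafka offers exactly-once processing semantics, built on idempotent producers and transactions. The guarantee covers read-process-write pipelines inside Kafka, where each message’s effect is applied once even if a producer retries or a consumer restarts; side effects in external systems still need their own safeguards.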

Real-World Applications

Kafka’s versatility has led to its adoption across numerous industries and use cases:

Log Aggregation

Collecting logs from multiple services into a centralized location for analysis is one of Kafka’s most common use cases. This approach simplifies monitoring and troubleshooting in distributed systems.

Event Sourcing

Kafka’s append-only log makes it perfect for event sourcing architectures, where the state of a system is determined by a sequence of events rather than just the current state.
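As a small illustration of the idea, current state can be rebuilt by folding over the event sequence. The event names and the `replay` function are made up for this sketch; in a real system the events would be read from a topic in offset order.

```python
def replay(events):
    """Rebuild account balances by folding over an ordered event list."""
    balances = {}
    for kind, account, amount in events:
        if kind == "deposited":
            balances[account] = balances.get(account, 0) + amount
        elif kind == "withdrawn":
            balances[account] = balances.get(account, 0) - amount
    return balances


events = [
    ("deposited", "acct-1", 100),
    ("withdrawn", "acct-1", 30),
    ("deposited", "acct-2", 50),
]
state = replay(events)
```

Replaying only a prefix of the list, for example `replay(events[:1])`, reconstructs the state as it was at that point in history, which is one of the main attractions of the pattern.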

Stream Processing

When combined with processing frameworks like Kafka Streams or Apache Flink, Kafka enables real-time data transformation and analysis, powering dashboards, alerts, and automated responses.

Change Data Capture

By capturing database changes as events, Kafka facilitates data synchronization across different systems and enables real-time data warehousing.

System Monitoring and Alerting

With its ability to process high volumes of metrics in real-time, Kafka serves as an excellent backbone for monitoring systems, helping detect anomalies and trigger alerts when necessary.

Getting Started with Kafka

Setting up a basic Kafka environment involves:

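Install Kafka: Download and install Kafka (or use a managed service). Older releases bundle ZooKeeper for cluster coordination; newer releases can run in KRaft mode, which needs no ZooKeeper, and Kafka 4.0 removed ZooKeeper support entirely.

Start the Servers: On a ZooKeeper-based release, launch ZooKeeper first, then start the Kafka broker(s). In KRaft mode, format the storage directory once and then start the broker(s).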

Create a Topic: Use the command-line tools to create a topic with an appropriate number of partitions.

Write Producer Code: Implement a simple producer to send messages to your topic.

Write Consumer Code: Create a consumer that reads and processes those messages.

From there, you can expand your Kafka implementation by adding more brokers, implementing more sophisticated producers and consumers, and integrating with other systems through Kafka Connect.

Common Challenges and Solutions

Configuration Complexity

With great power comes great configuration complexity. Kafka has numerous settings that can significantly impact its behavior and performance. Start with sensible defaults and adjust based on your specific needs, measuring the impact of each change.

Monitoring and Operations

Operating a Kafka cluster requires monitoring various metrics, from consumer lag to broker disk usage. Tools like Prometheus, Grafana, and dedicated Kafka management interfaces can help maintain visibility into your system’s health.
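Consumer lag, one of the metrics mentioned above, is simply the gap between the newest offset in a partition and the offset a group has committed. The sketch below uses made-up sample numbers and a hypothetical `consumer_lag` helper; treating a missing commit as offset 0 is an assumption of this example.

```python
def consumer_lag(end_offsets, committed_offsets):
    """Per-partition lag: log end offset minus the committed offset."""
    return {
        partition: end - committed_offsets.get(partition, 0)
        for partition, end in end_offsets.items()
    }


end_offsets = {0: 1500, 1: 1480, 2: 1510}  # made-up sample numbers
committed = {0: 1500, 1: 1200, 2: 1505}
lag = consumer_lag(end_offsets, committed)
total_lag = sum(lag.values())
```

In this sample, partition 1 accounts for almost all of the lag, the kind of skew that often points to a hot key or a slow consumer instance rather than a cluster-wide problem.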

Data Governance

As Kafka becomes central to your data architecture, questions about data ownership, schema evolution, and compliance become increasingly important. Consider implementing a schema registry and clear data governance policies from the start.

Conclusion: The Future is Streaming

Apache Kafka has fundamentally changed how we think about data movement in modern architectures. By decoupling data producers from consumers and providing a scalable, fault-tolerant backbone for real-time data streams, Kafka enables more responsive, resilient, and scalable systems.

Whether you’re building a microservice architecture, creating a real-time analytics pipeline, or modernizing legacy systems through event-driven design, Kafka provides a battle-tested foundation for your streaming data needs.

As we move further into the age of real-time data, Kafka’s importance will only continue to grow. Organizations that master this technology gain a significant competitive advantage through improved responsiveness, better integration between systems, and the ability to extract value from data the moment it’s created.

Cheers,

Sim